These AI Prompts Attracted Government Attention

In an era where artificial intelligence permeates daily life, from personal assistants to enterprise automation, the prompts we feed into AI systems can have far-reaching implications. Certain queries, whether intentional or accidental, can trigger scrutiny from regulatory bodies, raising concerns about ethics, security, and compliance.

This article explores the landscape of AI prompts that attract government attention, drawing on real-world examples, ethical frameworks, and future trends to provide a guide for users, developers, and policymakers. By understanding these risks, we can foster safer AI interactions while still harnessing AI's potential for innovation.

Understanding AI Prompt Risks

AI prompts are the instructions given to models like ChatGPT or Grok to generate responses. While most are benign, some cross into sensitive territories, such as national security, privacy violations, or illegal activities. These “risky prompts” often involve queries that could be interpreted as attempts to circumvent laws or exploit vulnerabilities.

Common categories include cybersecurity threats, controlled substances, and the weaponization of technology. For instance, prompts seeking detailed instructions for disallowed activities can be flagged for monitoring, since AI providers may cooperate with governments to prevent misuse.


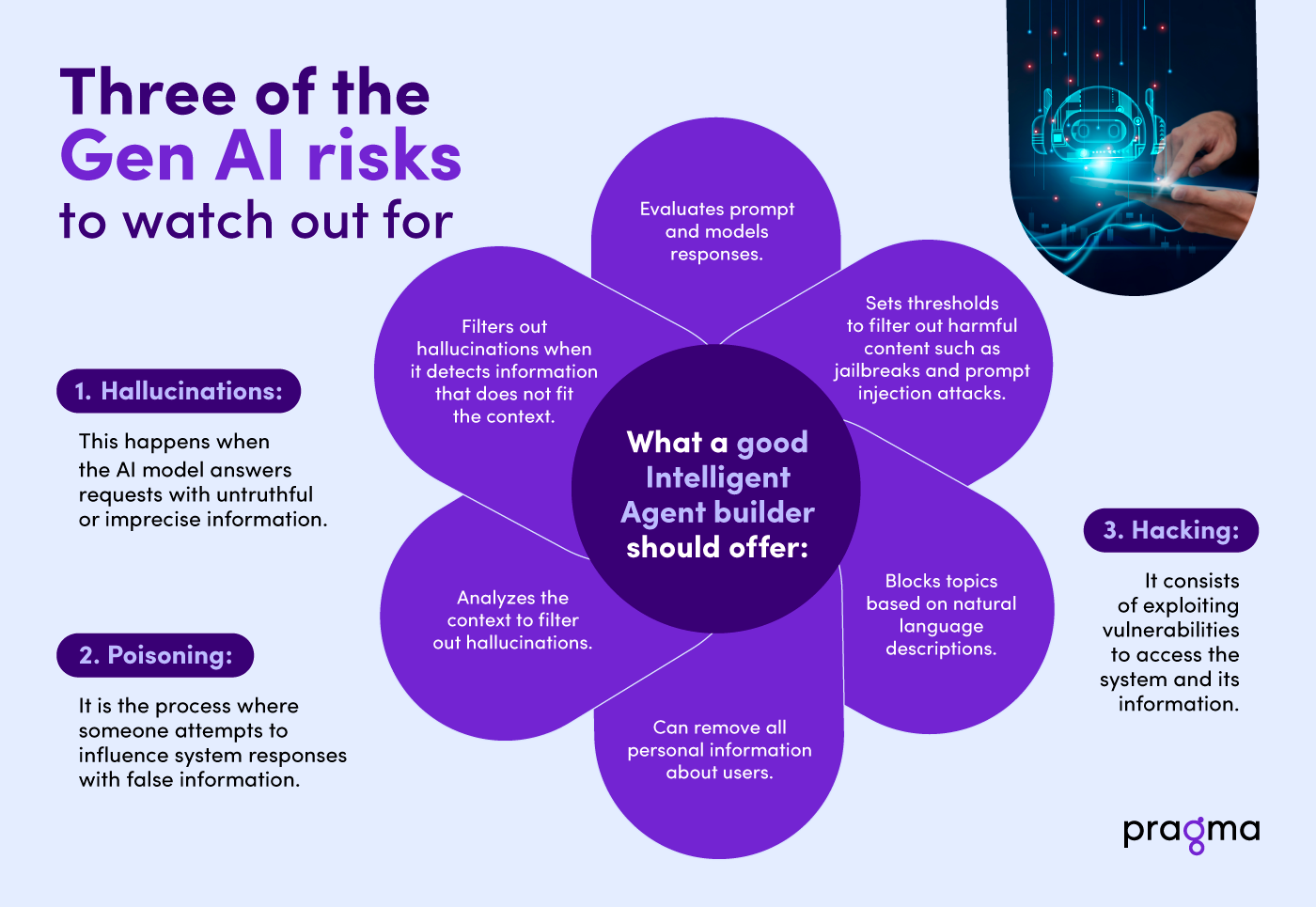

As “intelligent agents” become more prevalent, it’s crucial to be aware of the potential impact of GenAI.

According to UNESCO’s Recommendation on the Ethics of Artificial Intelligence, prompts must align with human rights and ethical standards to avoid unintended consequences.

Why Certain Prompts Attract Scrutiny

Governments monitor AI interactions to safeguard public interest, particularly in areas like terrorism prevention, cyber defense, and data privacy. The guide from the Alan Turing Institute on AI ethics emphasizes that prompts implying harm or illegal intent can lead to automated flags, user bans, or even legal investigations.

Legal and Ethical Boundaries

Laws such as the EU’s AI Act and U.S. executive orders on AI safety set boundaries for high-risk applications. Prompts that probe these boundaries, for example by simulating cyberattacks or generating deepfakes for misinformation, can attract attention from agencies like the FBI or NSA.

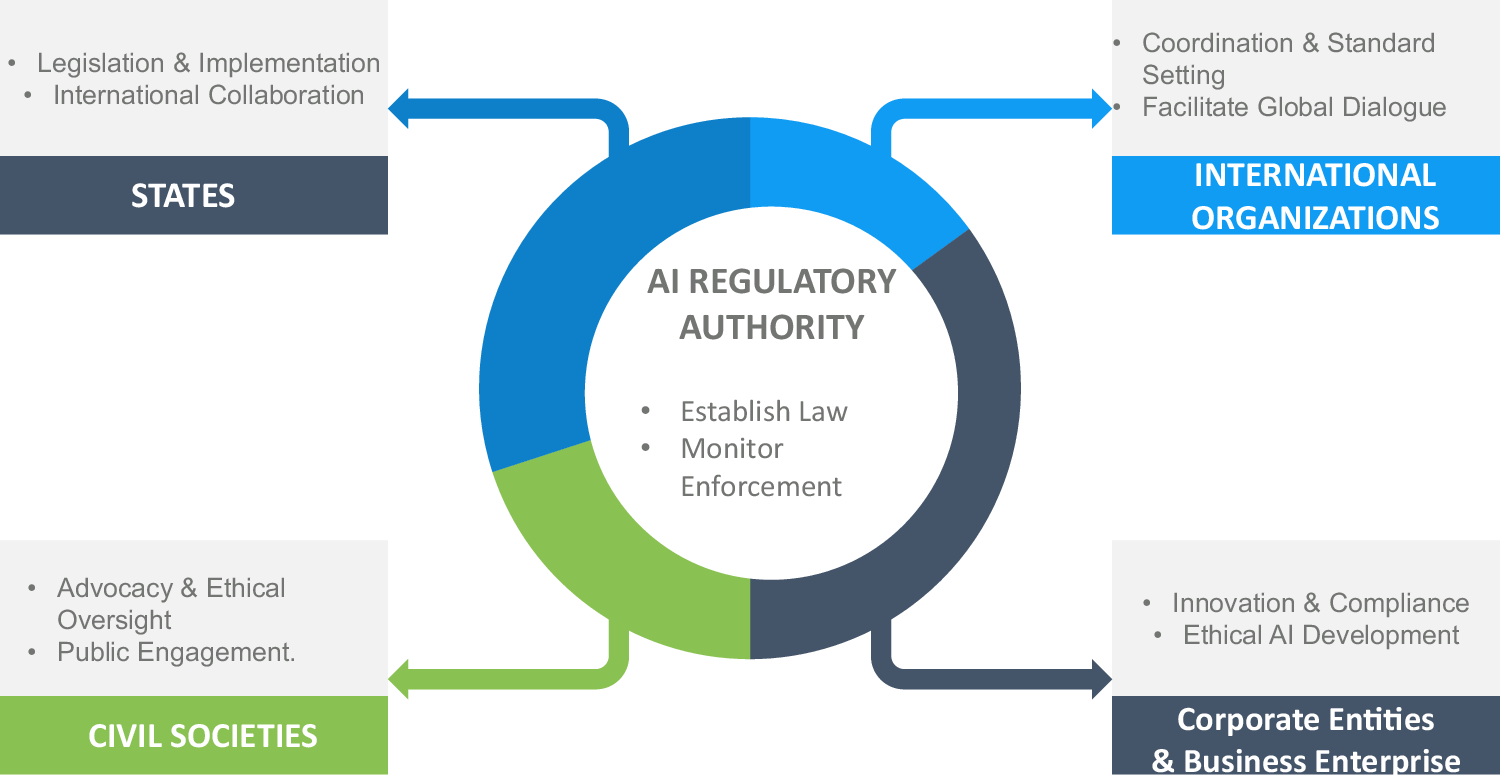

Government Monitoring of AI Usage

AI ecosystems involve layers of oversight, from model providers to regulatory bodies. Governments may require companies like OpenAI or xAI to publish transparency reports that track flagged prompts and help identify patterns of abuse.
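As a rough illustration of how flagged prompts might be rolled up for such a report, the sketch below counts flag events by category. The event shape (a dict with a `category` key) and the function name `summarize_flags` are assumptions made for this example, not any provider's actual reporting format.

```python
from collections import Counter
from typing import Iterable


def summarize_flags(events: Iterable[dict]) -> dict:
    """Count flagged-prompt events per category and compute each share.

    Each event is assumed to be a dict with a "category" key; this shape
    is hypothetical and chosen only for illustration.
    """
    counts = Counter(event["category"] for event in events)
    total = sum(counts.values())
    # most_common() orders categories from most to least frequent.
    return {
        category: {"count": n, "share": round(n / total, 2)}
        for category, n in counts.most_common()
    }


if __name__ == "__main__":
    sample = [
        {"category": "security"},
        {"category": "security"},
        {"category": "weapons"},
        {"category": "security"},
    ]
    print(summarize_flags(sample))
```

Only aggregate counts appear in the summary, so a report built this way can show patterns without exposing individual prompts.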


Examples of Attention-Attracting Prompts

We avoid specific actionable prompts here to prevent misuse, but we do include the following high-level categories:

- Security and Hacking Related: Queries about exploiting systems, even hypothetically, can mirror social engineering tactics warned against by the FBI.

- Substance and Weapon Queries: Requests for synthesizing controlled materials, as discussed in AI safety literature from Tigera, highlight risks of real-world harm.

Platform reports show that users frequently flag such prompts, which vary in type depending on their intent.


How AI Companies Handle Such Prompts

AI providers implement safety layers to detect and decline risky queries. For example, model behavior can be aligned with frameworks like the European Commission’s Ethics Guidelines for Trustworthy AI, which list technical robustness and safety among their requirements for trustworthy systems.

Safety Instructions and Filters

Built-in filters redirect or refuse responses, promoting ethical use. A typical declined response might explain the refusal while suggesting alternatives.
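The refuse-and-redirect pattern can be sketched in a few lines. This is a deliberately naive keyword screen, assuming hypothetical category names, term lists, and suggested alternatives; production systems rely on trained classifiers and policy models rather than substring matching.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical term lists for illustration only; real filters are far
# more sophisticated than keyword matching.
RISKY_TERMS = {
    "security": ("exploit", "malware", "bypass authentication"),
    "controlled_substances": ("synthesize", "precursor"),
}

# Hypothetical safer directions to suggest when a prompt is declined.
ALTERNATIVES = {
    "security": "Try asking about defensive practices such as patching or threat modeling.",
    "controlled_substances": "Try asking about regulation or public health resources.",
}


@dataclass
class Decision:
    allowed: bool
    category: Optional[str]
    message: str


def screen_prompt(prompt: str) -> Decision:
    """Return a refusal with an alternative if a risky term appears."""
    text = prompt.lower()
    for category, terms in RISKY_TERMS.items():
        if any(term in text for term in terms):
            message = f"I can't help with that request. {ALTERNATIVES[category]}"
            return Decision(False, category, message)
    return Decision(True, None, "")


if __name__ == "__main__":
    print(screen_prompt("How do I exploit this server?").message)
```

A screen this simple also produces false positives (a request to "synthesize these meeting notes" would be declined), which is one reason ambiguous wording can trigger a refusal.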


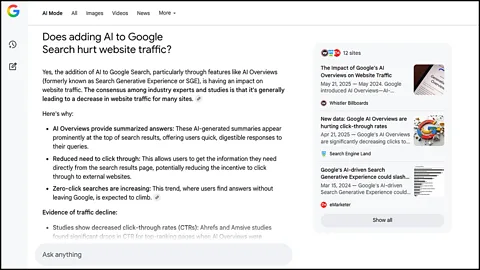


Best Practices for Safe Prompting

To avoid attracting unwanted attention, adhere to ethical guidelines. The University of Iowa’s guidelines for secure AI use recommend verifying sources and limiting sensitive data.
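One way to limit sensitive data is to mask obvious identifiers before a prompt leaves your machine. The sketch below is a minimal example under stated assumptions: the two regular expressions and the `redact` helper are illustrative, they catch only simple email and phone formats, and they are not a substitute for a proper data-loss-prevention tool.

```python
import re

# Simplified patterns chosen for illustration; they will miss many
# real-world formats and may match some non-sensitive text.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(prompt: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return PHONE.sub("[PHONE]", prompt)


if __name__ == "__main__":
    raw = "Contact jane.doe@example.com or +1 (555) 123-4567 today"
    print(redact(raw))  # Contact [EMAIL] or [PHONE] today
```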

Ethical Guidelines

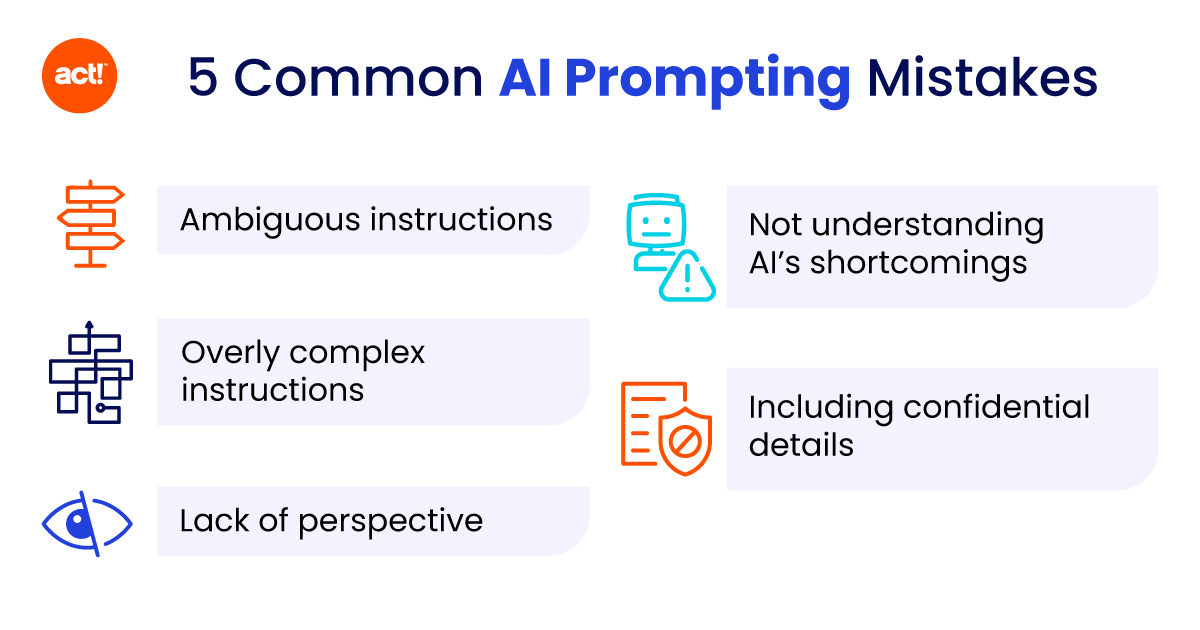

Focus on constructive queries: Use AI for innovation, not exploitation. Avoid ambiguity that could be misinterpreted.


Future of AI Regulation

As AI evolves, regulations are likely to tighten. The National Conference of State Legislatures tracks bills addressing AI transparency and bias. Future-facing examples include:

- Multi-agent AI Automation: Systems orchestrating tasks across agents could raise coordination concerns if used for surveillance.

- On-Device WASM Machine Learning: WebAssembly enables edge ML, but privacy risks in IoT demand new laws, as per Stanford HAI discussions.

- Privacy-First Generative Apps: These tools emphasize data protection, yet encrypted communications still leave room for misuse.

- Edge-Driven IoT Systems: Decentralized networks may evade central oversight, prompting updates to laws like the U.S. AI executive order.


The Bipartisan Policy Center outlines considerations for future governance, including pauses on high-risk deployments.

Conclusion

Navigating AI prompts effectively requires awareness of the risks involved and a commitment to high ethical standards. By following established best practices and staying informed about current regulations and guidelines, users can leverage AI technologies responsibly.

For further reading, MDN Web Docs offers detailed information on web technologies such as WebAssembly, and IEEE publishes guidance on AI standards and ethical frameworks.
